Question: -6(a) Describe conceptually how an SQL retrieval query will be executed by specifying the conceptual order of executing each of the six clauses.

Answer: – Order of execution of a Query

Now that we have an idea of all the parts of a query, we can talk about how they fit together in the context of a complete query.

Complete SELECT query

SELECT DISTINCT column, AGG_FUNC(column_or_expression), …

FROM mytable

JOIN another_table

ON mytable.column = another_table.column

WHERE constraint_expression

GROUP BY column

HAVING constraint_expression

ORDER BY column ASC/DESC

LIMIT count OFFSET count;

Each query begins with finding the data that we need in a database, and then filtering that data down into something that can be processed and understood as quickly as possible. Because the parts of a query are conceptually executed one after another, it’s important to understand the order of execution so that you know what results are accessible where.

Query order of execution

- FROM and JOINs

The FROM clause, and subsequent JOINs are first executed to determine the total working set of data that is being queried. This includes subqueries in this clause, and can cause temporary tables to be created under the hood containing all the columns and rows of the tables being joined.

- WHERE

Once we have the total working set of data, the first-pass WHERE constraints are applied to the individual rows, and rows that do not satisfy the constraint are discarded. Each of the constraints can only access columns directly from the tables requested in the FROM clause. Aliases in the SELECT part of the query are not accessible in most databases since they may include expressions dependent on parts of the query that have not yet executed.

- GROUP BY

The remaining rows after the WHERE constraints are applied are then grouped based on common values in the column specified in the GROUP BY clause. As a result of the grouping, there will only be as many rows as there are unique values in that column. Implicitly, this means that you should only need to use this when you have aggregate functions in your query.

- HAVING

If the query has a GROUP BY clause, the constraints in the HAVING clause are then applied to the grouped rows, and the grouped rows that don’t satisfy the constraint are discarded. As with the WHERE clause, aliases are not accessible from this step in most databases.

- SELECT

Any expressions in the SELECT part of the query are finally computed.

- DISTINCT

Of the remaining rows, rows with duplicate values in the column marked as DISTINCT will be discarded.

- ORDER BY

If an order is specified by the ORDER BY clause, the rows are then sorted by the specified data in either ascending or descending order. Since all the expressions in the SELECT part of the query have been computed, you can reference aliases in this clause.

- LIMIT / OFFSET

Finally, the rows that fall outside the range specified by the LIMIT and OFFSET are discarded, leaving the final set of rows to be returned from the query.
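The steps above can be traced on a small example. The following is a minimal sketch using Python's built-in `sqlite3` module; the `orders` table and its rows are made up for illustration and are not part of the original answer. The comments follow the conceptual order, not the order in which any particular engine actually evaluates the query.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE orders (customer TEXT, amount INTEGER);  -- hypothetical table
    INSERT INTO orders VALUES
        ('ann', 50), ('ann', 70), ('bob', 5),
        ('bob', 200), ('cat', 30), ('cat', 8);
""")

# Conceptual order of execution:
#   FROM orders             -> working set of 6 rows
#   WHERE amount >= 10      -> drops (bob, 5) and (cat, 8), leaving 4 rows
#   GROUP BY customer       -> 3 groups: ann, bob, cat
#   HAVING SUM(amount) > 40 -> drops cat (total 30); aggregate written in full
#   SELECT                  -> computes the alias `total`
#   ORDER BY total DESC     -> may use the alias, because SELECT has already run
#   LIMIT 1                 -> keeps only the first row
query = """
    SELECT customer, SUM(amount) AS total
    FROM orders
    WHERE amount >= 10
    GROUP BY customer
    HAVING SUM(amount) > 40
    ORDER BY total DESC
    LIMIT 1
"""
rows = conn.execute(query).fetchall()
print(rows)  # [('bob', 200)]
```

Note that bob's total is 200 rather than 205: the WHERE filter removed the row with amount 5 before the groups were formed.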

Conclusion

Not every query needs to have all the parts we listed above, but a part of why SQL is so flexible is that it allows developers and data analysts to quickly manipulate data without having to write additional code, all just by using the above clauses.

Question: – 6(b) Illustrate how the process of creating first normal form relations may lead to multivalued dependencies (MVDs). How should the first normalization be done properly so that MVDs are avoided?

Answer: –

Normalization of Database

Database normalization is a technique for organizing the data in a database. It is a systematic approach of decomposing tables to eliminate data redundancy (repetition) and undesirable characteristics such as insertion, update, and deletion anomalies. It is a multi-step process that puts data into tabular form and removes duplicated data from the relation tables.

Normalization is used mainly for two purposes:

- Eliminating redundant (duplicated) data.

- Ensuring data dependencies make sense, i.e., data is logically stored.

If a table is not properly normalized and has data redundancy, it will not only take up extra storage space but will also be difficult to handle and update without risking data loss. Insertion, update, and deletion anomalies are very frequent if a database is not normalized. To understand these anomalies, let us take the example of a Student table.

| rollno | name | branch | hod | office_tel |
| --- | --- | --- | --- | --- |
| 401 | Akon | CSE | Mr. X | 53337 |
| 402 | Bkon | CSE | Mr. X | 53337 |
| 403 | Ckon | CSE | Mr. X | 53337 |
| 404 | Dkon | CSE | Mr. X | 53337 |

In the table above, we have data of 4 Computer Sci. students. As we can see, data for the fields branch, hod(Head of Department) and office_tel is repeated for the students who are in the same branch in the college, this is Data Redundancy.

Insertion Anomaly

Suppose for a new admission, until and unless a student opts for a branch, data of the student cannot be inserted, or else we will have to set the branch information as NULL.

Also, if we have to insert data of 100 students of same branch, then the branch information will be repeated for all those 100 students.

These scenarios are nothing but Insertion anomalies.

Updation Anomaly

What if Mr. X leaves the college, or is no longer the HOD of the computer science department? In that case all the student records will have to be updated, and if by mistake we miss any record, it will lead to data inconsistency. This is an updation anomaly.

Deletion Anomaly

In our Student table, two different kinds of information are kept together: student information and branch information. Hence, at the end of the academic year, if the student records are deleted, we will also lose the branch information. This is a deletion anomaly.
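One common way to remove these anomalies is to keep branch information in its own table. The sketch below assumes a two-table split (`student` and `branch`) that the original answer does not spell out, and uses a made-up replacement HOD, 'Mr. Y'; it is an illustration with Python's `sqlite3` module rather than a prescribed design.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    -- Assumed decomposition: branch facts are stored once per branch.
    CREATE TABLE branch (
        branch     TEXT PRIMARY KEY,
        hod        TEXT,
        office_tel TEXT
    );
    CREATE TABLE student (
        rollno INTEGER PRIMARY KEY,
        name   TEXT,
        branch TEXT REFERENCES branch(branch)  -- may stay NULL until chosen
    );
    INSERT INTO branch VALUES ('CSE', 'Mr. X', '53337');
    INSERT INTO student VALUES
        (401, 'Akon', 'CSE'), (402, 'Bkon', 'CSE'),
        (403, 'Ckon', 'CSE'), (404, 'Dkon', 'CSE');
""")

# Updation: a change of HOD now touches exactly one row.
cur = conn.execute("UPDATE branch SET hod = 'Mr. Y' WHERE branch = 'CSE'")
rows_updated = cur.rowcount

# Deletion: removing every student leaves the branch information intact.
conn.execute("DELETE FROM student")
branches_left = conn.execute(
    "SELECT branch, hod, office_tel FROM branch"
).fetchall()

print(rows_updated, branches_left)  # 1 [('CSE', 'Mr. Y', '53337')]
```

Inserting 100 new CSE students would likewise add 100 rows to `student` only, without repeating the HOD or telephone number.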

Normalization Rule

Normalization rules are divided into the following normal forms:

- First Normal Form

- Second Normal Form

- Third Normal Form

- BCNF

- Fourth Normal Form

First Normal Form (1NF)

For a table to be in the First Normal Form, it should satisfy the following four rules:

- It should only have single-valued (atomic) attributes/columns.

- Values stored in a column should be of the same domain.

- All the columns in a table should have unique names.

- The order in which data is stored should not matter.

Second Normal Form (2NF)

For a table to be in the Second Normal Form,

- It should be in the First Normal form.

- And, it should not have Partial Dependency.

Third Normal Form (3NF)

A table is said to be in the Third Normal Form when,

- It is in the Second Normal form.

- And, it doesn’t have Transitive Dependency.

Boyce and Codd Normal Form (BCNF)

Boyce-Codd Normal Form (BCNF) is a stricter version of the Third Normal Form. It deals with a type of anomaly that is not handled by 3NF. A 3NF table that does not have multiple overlapping candidate keys is in BCNF. For a table R to be in BCNF, the following conditions must be satisfied:

- R must be in the Third Normal Form.

- For each nontrivial functional dependency ( X → Y ), X must be a superkey.
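The superkey condition can be checked mechanically with an attribute closure. The sketch below applies it to the Student table used earlier; the two functional dependencies (rollno → name, branch and branch → hod, office_tel) are assumptions read off that example, and the function names are ours.

```python
def closure(attrs, fds):
    """Return the set of attributes functionally determined by `attrs`."""
    result = set(attrs)
    changed = True
    while changed:
        changed = False
        for lhs, rhs in fds:
            # If we already have the whole left side, we gain the right side.
            if lhs <= result and not rhs <= result:
                result |= rhs
                changed = True
    return result


def bcnf_violations(relation, fds):
    """List the nontrivial FDs whose left-hand side is not a superkey."""
    return [
        (lhs, rhs)
        for lhs, rhs in fds
        if not rhs <= lhs and closure(lhs, fds) != relation
    ]


student = frozenset({"rollno", "name", "branch", "hod", "office_tel"})
fds = [  # assumed dependencies for the Student example
    (frozenset({"rollno"}), frozenset({"name", "branch"})),
    (frozenset({"branch"}), frozenset({"hod", "office_tel"})),
]

violations = bcnf_violations(student, fds)
print(violations)
```

Under these assumptions the only violation reported is branch → hod, office_tel, because branch alone does not determine rollno or name and so is not a superkey.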

Fourth Normal Form (4NF)

A table is said to be in the Fourth Normal Form when,

- It is in the Boyce-Codd Normal Form.

- And, it doesn’t have Multi-Valued Dependency.
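To connect these definitions back to the question: a multivalued dependency typically appears when a record with two or more independent multivalued attributes is flattened into a single 1NF relation, because every value of one attribute must then be paired with every value of the other. Placing each multivalued attribute in its own relation avoids this. The sketch below uses a hypothetical employee record (the name, projects and dependents are invented) to show both approaches.

```python
from itertools import product

# Hypothetical non-1NF record: two independent multivalued attributes.
employee = {
    "name": "Smith",
    "projects": ["X", "Y", "Z"],
    "dependents": ["John", "Anna"],
}

# Naive 1NF: flatten both attributes into ONE relation. Every project must be
# paired with every dependent, which creates the MVDs
# name ->> project and name ->> dependent.
flat = sorted(
    (employee["name"], p, d)
    for p, d in product(employee["projects"], employee["dependents"])
)

# Proper 1NF: one relation per multivalued attribute.
emp_projects = sorted((employee["name"], p) for p in employee["projects"])
emp_dependents = sorted((employee["name"], d) for d in employee["dependents"])

# Joining the two relations on the employee name rebuilds the flat relation,
# so the decomposition loses no information.
rejoined = sorted(
    (n1, p, d)
    for n1, p in emp_projects
    for n2, d in emp_dependents
    if n1 == n2
)

print(len(flat), len(emp_projects) + len(emp_dependents))  # 6 5
```

The flat relation needs 3 × 2 = 6 tuples for one employee, while the two separate relations need 3 + 2 = 5 and contain no multivalued dependency; the gap widens as the lists grow.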

Question: – 7(a) Discuss the design and implementation issues for active databases.

Answer: –

An active database management system (ADBMS) is an event-driven system in which schema or data changes generate events monitored by active rules. Active database management systems are invoked by synchronous events generated by user or application programs as well as external asynchronous data change events such as a change in sensor value or time.


Active database management systems support event monitoring. They store events in an event history as an event type and a time; the former represents any kind of primitive event, while the latter represents the time the event occurred. ADBMSs clearly define rule semantics such as event consumption policy, event detection, and coupling modes, along with instance-oriented or set-oriented semantics.

A common event consumption policy includes the following parameter contexts:

- Cumulative: All instances of a primitive event are consumed if a complex event occurs.

- Chronicle: Events are consumed in time order.

- Recent: The latest instances of primitive events that are part of complex events are consumed in time order.

Design and Implementation Issues for Active Databases

Beyond the main concepts for specifying active rules, several additional issues concern how rules are designed and implemented. The first issue concerns activation, deactivation, and grouping of rules. In addition to creating rules, an active database system should allow users to activate, deactivate, and drop rules by referring to their rule names. A deactivated rule will not be triggered by the triggering event. This feature allows users to selectively deactivate rules for certain periods of time when they are not needed. The activate command will make the rule active again. The drop command deletes the rule from the system. Another option is to group rules into named rule sets, so the whole set of rules can be activated, deactivated, or dropped. It is also useful to have a command that can trigger a rule or rule set via an explicit PROCESS RULES command issued by the user.
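As a rough illustration of creating a rule and dropping it by name, the sketch below uses SQLite triggers through Python's `sqlite3` module. The tables and the trigger name `count_insert` are hypothetical. SQLite provides CREATE TRIGGER and DROP TRIGGER but no separate command for deactivating a trigger, so only creation and dropping are shown.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE employee (name TEXT, dept TEXT);
    CREATE TABLE dept_count (dept TEXT PRIMARY KEY, n INTEGER);
    INSERT INTO dept_count VALUES ('CSE', 0);

    -- Rule named count_insert: after each insert into employee,
    -- keep the derived head count up to date.
    CREATE TRIGGER count_insert AFTER INSERT ON employee
    BEGIN
        UPDATE dept_count SET n = n + 1 WHERE dept = NEW.dept;
    END;
""")


def head_count():
    """Read the derived head count for the CSE department."""
    row = conn.execute("SELECT n FROM dept_count WHERE dept = 'CSE'").fetchone()
    return row[0]


conn.execute("INSERT INTO employee VALUES ('Akon', 'CSE')")
count_with_rule = head_count()      # the rule fired: count is now 1

conn.execute("DROP TRIGGER count_insert")   # drop the rule by its name
conn.execute("INSERT INTO employee VALUES ('Bkon', 'CSE')")
count_after_drop = head_count()     # the rule no longer fires: still 1

print(count_with_rule, count_after_drop)  # 1 1
```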

- The second issue concerns whether the triggered action should be executed before, after, instead of, or concurrently with the triggering event. A before trigger executes the trigger before executing the event that caused the trigger. It can be used in applications such as checking for constraint violations. An after trigger executes the trigger after executing the event, and it can be used in applications such as maintaining derived data and monitoring for specific events and conditions. An instead of trigger executes the trigger instead of executing the event, and it can be used in applications such as executing corresponding updates on base relations in response to an event that is an update of a view.

- A related issue is whether the action being executed should be considered as a separate transaction or whether it should be part of the same transaction that triggered the rule. We will try to categorize the various options. It is important to note that not all options may be available for a particular active database system. In fact, most commercial systems are limited to one or two of the options that we will now discuss.

- Let us assume that the triggering event occurs as part of a transaction execution. We should first consider the various options for how the triggering event is related to the evaluation of the rule’s condition. The rule condition evaluation is also known as rule consideration, since the action is to be executed only after considering whether the condition evaluates to true or false. There are three main possibilities for rule consideration:

- Immediate consideration. The condition is evaluated as part of the same transaction as the triggering event, and is evaluated immediately. This case can be further categorized into three options:

- Evaluate the condition before executing the triggering event.

- Evaluate the condition after executing the triggering event.

- Evaluate the condition instead of executing the triggering event.

- Deferred consideration. The condition is evaluated at the end of the transaction that included the triggering event. In this case, there could be many triggered rules waiting to have their conditions evaluated.

- Detached consideration. The condition is evaluated as a separate transaction, spawned from the triggering transaction.

- The next set of options concerns the relationship between evaluating the rule condition and executing the rule action. Here, again, three options are possible: immediate, deferred, or detached. Most active systems use the first option. That is, as soon as the condition is evaluated, if it returns true, the action is immediately executed.

- The Oracle system uses the immediate consideration model, but it allows the user to specify for each rule whether the before or after option is to be used with immediate condition evaluation. It also uses the immediate execution model. The STARBURST system uses the deferred consideration option, meaning that all rules triggered by a transaction wait until the triggering transaction reaches its end and issues its COMMIT WORK command before the rule conditions are evaluated.

- Another issue concerning active database rules is the distinction between row-level rules and statement-level rules. Because SQL update statements (which act as triggering events) can specify a set of tuples, one has to distinguish between whether the rule should be considered once for the whole statement or whether it should be considered separately for each row (that is, tuple) affected by the statement. The SQL-99 standard and the Oracle system allow the user to choose which of the options is to be used for each rule, whereas STARBURST uses statement-level semantics only.

- One of the difficulties that may have limited the widespread use of active rules, in spite of their potential to simplify database and software development, is that there are no easy-to-use techniques for designing, writing, and verifying rules. For exam-ple, it is quite difficult to verify that a set of rules is consistent, meaning that two or more rules in the set do not contradict one another. It is also difficult to guaranteetermination of a set of rules under all circumstances. To illustrate the termination

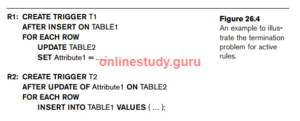

- problem briefly, consider the rules in Figure 26.4. Here, rule R1is triggered by an INSERT event onTABLE1 and its action includes an update event on Attribute1 of TABLE2. However, rule R2’s triggering event is an UPDATE event on Attribute1 of TABLE2, and its action includes an INSERT event onTABLE1. In this example, it is easy to see that these two rules can trigger one another indefinitely, leading to non-termination. However, if dozens of rules are written, it is very difficult to determine whether termination is guaranteed or not.

- If active rules are to reach their potential, it is necessary to develop tools that help users design, debug, and monitor them.
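One simple kind of tool for the termination problem is a triggering graph: draw an edge from rule r to rule s whenever an action of r produces the event that triggers s, and look for cycles. The sketch below encodes R1 and R2 as described above; the event encoding and function names are our own assumptions. A cycle only signals that non-termination is possible, since the check ignores rule conditions.

```python
# Each rule maps to (triggering event, set of events produced by its action).
rules = {
    "R1": (("INSERT", "TABLE1"), {("UPDATE", "TABLE2.Attribute1")}),
    "R2": (("UPDATE", "TABLE2.Attribute1"), {("INSERT", "TABLE1")}),
}


def triggering_graph(rules):
    """Edge r -> s whenever an action event of r is the triggering event of s."""
    return {
        r: [s for s, (event, _) in rules.items() if event in actions]
        for r, (_, actions) in rules.items()
    }


def has_cycle(graph):
    """Depth-first search for a cycle in a directed graph."""
    WHITE, GREY, BLACK = 0, 1, 2   # unvisited, on current path, finished
    colour = {node: WHITE for node in graph}

    def visit(node):
        colour[node] = GREY
        for nxt in graph[node]:
            if colour[nxt] == GREY:          # back edge: cycle found
                return True
            if colour[nxt] == WHITE and visit(nxt):
                return True
        colour[node] = BLACK
        return False

    return any(colour[n] == WHITE and visit(n) for n in graph)


graph = triggering_graph(rules)
may_not_terminate = has_cycle(graph)
print(graph, may_not_terminate)  # {'R1': ['R2'], 'R2': ['R1']} True
```

With dozens of rules the same check still runs in time linear in the size of the graph, which is why this style of conservative analysis is attractive as a first filter.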

Question: – Define join dependency and 5NF. Why is 5NF also called project-join normal form? Illustrate.

Answer: –

Join Dependency(JD)

Let R be a relation. Let A, B, …, Z be arbitrary subsets of R’s attributes. R satisfies the JD

* ( A, B, …, Z )

if and only if R is equal to the join of its projections on A, B, …, Z.

A join dependency JD(R1, R2, …, Rn) specified on relation schema R is a trivial JD if one of the relation schemas Ri in JD(R1, R2, …, Rn) is equal to R.

Join dependency is used in the following case :

When there is no lossless join decomposition of R into two relation schemas, but there is a lossless join decomposition of R into more than two relation schemas.

Point: Join dependencies are hard to identify in practice, so they are rarely used in database design.

Negative Example :

Consider a relation ACP(Agent, Company, Product)

ACP (each tuple means that the Agent sells the Company’s Product):

| Agent (A) | Company (C) | Product (P) | Meaning of the tuples |
| --- | --- | --- | --- |
| A1 | PQR | Nut | A1 sells PQR’s Nuts and Screws. |
| A1 | PQR | Screw | |
| A1 | XYZ | Bolt | A1 sells XYZ’s Bolts. |
| A2 | PQR | Bolt | A2 sells PQR’s Bolts. |

The table is in 4NF as it does not contain any multivalued dependency. However, the relation contains redundancy, as the fact that A1 is an agent for PQR is recorded twice. There is no way of eliminating this redundancy without losing information.

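Suppose that the table is decomposed into two relations, R1(Agent, Company) and R2(Agent, Product).

R1:

| Agent | Company |
| --- | --- |
| A1 | PQR |
| A1 | XYZ |
| A2 | PQR |

R2:

| Agent | Product |
| --- | --- |
| A1 | Nut |
| A1 | Screw |
| A1 | Bolt |
| A2 | Bolt |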

The redundancy has been eliminated by decomposing ACP relation, but the information about which companies make which products and which agents supply which product has been lost.

The natural join of these relations over the ‘agent’ columns is:

R12:

| Agent | Company | Product |
| --- | --- | --- |
| A1 | PQR | Nut |
| A1 | PQR | Screw |
| A1 | PQR | Bolt |
| A1 | XYZ | Nut |
| A1 | XYZ | Screw |
| A1 | XYZ | Bolt |
| A2 | PQR | Bolt |

Hence, the decomposition of ACP is a lossy join decomposition: the natural join contains three spurious tuples, (A1, PQR, Bolt), (A1, XYZ, Nut) and (A1, XYZ, Screw), that are not in ACP and give incorrect information.

But now, suppose the original relation ACP is decomposed into 3 relations :

- R1(Agent, Company)

- R2(Agent, Product)

- R3(Company, Product)

The natural join of R1 and R2 over ‘Agent’ is R12, calculated above. Joining R12 with R3 over ‘Company’ and ‘Product’ gives:

R123:

| Agent | Company | Product |
| --- | --- | --- |
| A1 | PQR | Nut |
| A1 | PQR | Screw |
| A1 | PQR | Bolt |
| A1 | XYZ | Bolt |
| A2 | PQR | Bolt |

Again, we get an extra tuple, (A1, PQR, Bolt), which is not in ACP.

Hence, it has to be accepted that normalization cannot always eliminate every redundancy, because not every decomposition is lossless. Here again, the decomposition of ACP is a lossy join decomposition.
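The negative example can be checked with a few lines of code. The sketch below represents each tuple as a set of (attribute, value) pairs and implements projection and natural join directly; the helper names are ours and the data is the ACP relation given above.

```python
def relation(attrs, tuples):
    """Build a relation as a set of frozensets of (attribute, value) pairs."""
    return {frozenset(zip(attrs, t)) for t in tuples}


def project(rel, attrs):
    """Projection onto `attrs`; duplicates vanish because the result is a set."""
    return {frozenset((a, v) for a, v in row if a in attrs) for row in rel}


def natural_join(r, s):
    """Natural join: merge every pair of rows that agree on shared attributes."""
    joined = set()
    for x in r:
        for y in s:
            merged = dict(x)
            if all(merged.get(a, v) == v for a, v in y):
                merged.update(y)
                joined.add(frozenset(merged.items()))
    return joined


acp = relation(
    ("Agent", "Company", "Product"),
    [("A1", "PQR", "Nut"), ("A1", "PQR", "Screw"),
     ("A1", "XYZ", "Bolt"), ("A2", "PQR", "Bolt")],
)
r1 = project(acp, {"Agent", "Company"})
r2 = project(acp, {"Agent", "Product"})
r3 = project(acp, {"Company", "Product"})

r12 = natural_join(r1, r2)      # joined over Agent
r123 = natural_join(r12, r3)    # joined over Company and Product
spurious = r123 - acp           # tuples that were never in ACP

print(len(r12), len(r123), sorted(dict(row)["Product"] for row in spurious))
# 7 5 ['Bolt']
```

The single spurious tuple left after the three-way join is (A1, PQR, Bolt), matching the R123 table.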

Positive Example :

Consider the same schema, but under an additional rule: “if an agent sells a product, the agent is an agent for a company, and that company makes the product, then the agent always sells that product for the company”. Under these circumstances, the ACP table is:

ACP:

| Agent | Company | Product |
| --- | --- | --- |
| A1 | PQR | Nut |
| A1 | PQR | Bolt |
| A1 | XYZ | Nut |
| A1 | XYZ | Bolt |
| A2 | PQR | Nut |

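The relation ACP is again decomposed into three relations, R1(Agent, Company), R2(Agent, Product) and R3(Company, Product):

R1:

| Agent | Company |
| --- | --- |
| A1 | PQR |
| A1 | XYZ |
| A2 | PQR |

R2:

| Agent | Product |
| --- | --- |
| A1 | Nut |
| A1 | Bolt |
| A2 | Nut |

R3:

| Company | Product |
| --- | --- |
| PQR | Nut |
| PQR | Bolt |
| XYZ | Nut |
| XYZ | Bolt |

The natural join of R1 and R3 over ‘Company’, followed by the natural join of the result R13 with R2 over ‘Agent’ and ‘Product’, gives:

| Agent | Company | Product |
| --- | --- | --- |
| A1 | PQR | Nut |
| A1 | PQR | Bolt |
| A1 | XYZ | Nut |
| A1 | XYZ | Bolt |
| A2 | PQR | Nut |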

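Hence, in this example, the decomposition of ACP into R1, R2 and R3 is a lossless join decomposition: ACP satisfies the join dependency * (R1, R2, R3), and the redundancies are eliminated. A relation is in 5NF if every nontrivial join dependency that holds in it is implied by its candidate keys. ACP itself does not meet this condition, so it is replaced by its three projections, each of which is in 5NF. Because the original relation is recovered exactly by joining these projections, 5NF is also called project-join normal form (PJNF).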
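The definition of a join dependency translates almost literally into code: project the relation onto each component, join the projections, and compare with the original. The sketch below does this for the positive example; `satisfies_jd` and the other helper names are our own. Passing the test on one instance shows only that this instance is consistent with the dependency, not that the dependency holds for every legal state of the schema.

```python
from functools import reduce


def project(rel, attrs):
    """Projection onto `attrs`; the set removes duplicate rows."""
    return {frozenset((a, v) for a, v in row if a in attrs) for row in rel}


def natural_join(r, s):
    """Natural join: merge every pair of rows that agree on shared attributes."""
    joined = set()
    for x in r:
        for y in s:
            merged = dict(x)
            if all(merged.get(a, v) == v for a, v in y):
                merged.update(y)
                joined.add(frozenset(merged.items()))
    return joined


def satisfies_jd(rel, components):
    """True when `rel` equals the join of its projections on `components`."""
    projections = (project(rel, c) for c in components)
    return reduce(natural_join, projections) == rel


attrs = ("Agent", "Company", "Product")
acp = {
    frozenset(zip(attrs, t))
    for t in [("A1", "PQR", "Nut"), ("A1", "PQR", "Bolt"),
              ("A1", "XYZ", "Nut"), ("A1", "XYZ", "Bolt"),
              ("A2", "PQR", "Nut")]
}
# R1, R3, R2 in the order used above: join over Company, then Agent and Product.
components = [{"Agent", "Company"}, {"Company", "Product"}, {"Agent", "Product"}]

holds = satisfies_jd(acp, components)
print(holds)  # True
```

Running the same function on the ACP relation from the negative example returns False, because the join of its three projections contains the extra tuple (A1, PQR, Bolt).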

This article is contributed by Tarun Jangra.